⚡NVIDIA Chips to China. “Damned If You Do, Damned If You Don’t.”

Plus: Meta goes from open-source to a closed, profit-driven AI strategy.

Meta shifts from open-source to closed-source AI in pursuit of profit, marking a major strategic reset. Meanwhile, space is emerging as the next frontier for data center energy as companies begin launching infrastructure beyond Earth. And the Nvidia-to-China export dilemma continues, a true “damned if you do, damned if you don’t” moment for U.S. tech policy.

Let’s dive in and stay curious.

NVIDIA Chips to China. “Damned If You Do, Damned If You Don’t.”

🧰 AI Tools - Open Source Models

The AI Data Race Just Left the Planet. Starcloud Pioneers Orbital Compute

🛠️ AI Jobs Corner

Meta goes from open-source to a closed, profit-driven AI strategy.

📚 AI Learning Resource - Open Source Classes


📰 AI News and Trends

Pinterest Saving 90% on AI Models Through Open Source

Google Announces AI Partnerships Pilot with News Publishers

Shopify merchants can now sell products through AI chatbots

Nvidia builds location verification tech that could help fight chip smuggling

Other Tech News

Trump says Warner Bros Deal Should Include CNN, complicating things for Netflix

U.S. wants to mandate social media checks for tourists

Instagram's new feature lets users pick topics they want to see more or less of on their Explore page.

YouTube TV to Launch More Than 10 Cheaper Genre-Specific Plans, Including a Sports Tier With ESPN Unlimited

Musk’s fortune would more than double on a $1.5 trillion SpaceX IPO

Meta goes from open-source to a closed, profit-driven AI strategy.

Following the failure of its Llama 4 model, Mark Zuckerberg has taken direct control of Meta’s AI division and reshuffled key leadership: Yann LeCun has departed, and Scale AI’s Alexandr Wang has been brought in. The company is investing $600 billion to develop “Avocado,” a closed-source proprietary model trained in part on rival models from Google and OpenAI. This shift aims to monetize AI and compete directly with top rivals, though it has caused significant internal turmoil and raises regulatory concerns regarding safety.

Pros of Open Source AI

Rapid Innovation & Bug Fixing: When code is public, thousands of external developers can improve it, fix bugs, and build plugins faster than a single internal team. This was Meta’s original strategy with Llama.

Industry Standardization: Open models often become the “standard” on which other tools are built. If everyone uses your architecture, you control the ecosystem (similar to Google’s Android).

Transparency & Trust: Researchers and governments can audit the code for bias and safety flaws, creating more trust than a “black box” proprietary model.

Lower Barrier to Entry: It democratizes AI, allowing smaller companies, students, and researchers to use powerful tools without paying massive licensing fees.

Cons of Open Source AI (Why Meta is leaving it)

Hard to Monetize: It is difficult to sell a product that you are also giving away for free. Meta is moving to a “profit-driven” model because open source wasn’t generating direct revenue.

Aiding Competitors: When you release your model, your rivals can use it to catch up. Meta is now doing the reverse, using rival models to train its own, which shows how value leaks when models are open.

Loss of Control: Once a model is released, the company cannot control how it is used (e.g., for spam, deepfakes, or by geopolitical rivals).

Capital Intensity vs. Return: Developing frontier models costs billions (Meta’s planned spend is $600 billion). Justifying that spend to investors is very hard if the resulting asset is given away for free rather than kept as a proprietary trade secret.

🛠️ AI Jobs Corner

Apply Today - Open Positions.

First-Line Supervisors of Production and Operating Workers

Computer and Information Systems Managers

NVIDIA Chips to China. “Damned If You Do, Damned If You Don’t.”

Washington unexpectedly lifted export controls on Nvidia’s advanced H200 chips, forcing Beijing to choose between supporting its domestic chipmakers or accelerating AI development with U.S. hardware.

The shift comes as the U.S. holds a roughly 13:1 compute advantage over China, a lead analysts warn could shrink quickly if tens of billions of dollars in GPUs flow into the country. But if the U.S. keeps the ban in place, China will develop its own chips, accelerate innovation, and still catch up, without Nvidia seeing any financial benefit. Some argue the U.S. might as well export the chips, make some money in the process, and perhaps build in surveillance technology to learn how China is developing AI.

National security experts call the move a “disaster,” arguing it boosts China’s military and intelligence capabilities at a time when U.S. agencies say they “cannot get enough chips” themselves. Major tech companies like Microsoft and AWS back restrictions such as the GAIN Act, which prioritizes U.S. demand, but Nvidia, facing declining reliance from U.S. hyperscalers, pushed aggressively for the policy change. Critics warn this could accelerate China’s frontier models like DeepSeek and Qwen, undermine export controls, and erode long-term U.S. AI dominance.

📚 AI Learning Corner

Open-source learning resources

1. Efficiently Serving LLMs - The Best “Crash Course” for Concepts - Focuses on performance optimizations like KV Caching, Continuous Batching, and Quantization, the key methods for saving money on inference.

2. vLLM Documentation - The Best “Engineer’s Bible” for Production - The definitive technical guide for the serving engine that provides the high throughput and low latency you need in a production environment. Look for the “Serving an LLM” section.

3. Ollama - The Best “Try it Now” Tool (Laptop to Server) - The simplest way to start running models like Llama 3, DeepSeek, and Mistral with a single command, for rapid prototyping and testing.
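To see why KV caching and quantization matter for your inference bill, here is a rough back-of-the-envelope sketch in Python. It estimates how much GPU memory the KV cache takes for one request. The layer count, KV-head count, and head size below are assumptions chosen to resemble a Llama 3 8B-class model, and the helper name `kv_cache_bytes` is purely illustrative, not part of any library.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Approximate KV-cache size in bytes.

    The leading 2 accounts for storing one key tensor and one value
    tensor per layer. bytes_per_value=2 corresponds to fp16/bf16;
    use 1 for an 8-bit quantized cache.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Assumed architecture, similar to a Llama 3 8B-class model.
MODEL = dict(num_layers=32, num_kv_heads=8, head_dim=128)

fp16_cache = kv_cache_bytes(**MODEL, seq_len=8192)
int8_cache = kv_cache_bytes(**MODEL, seq_len=8192, bytes_per_value=1)

print(f"fp16 KV cache, 8K context: {fp16_cache / 2**30:.2f} GiB")
print(f"int8 KV cache, 8K context: {int8_cache / 2**30:.2f} GiB")
```

Under these assumptions, every concurrent 8K-token request holds about 1 GiB of cache at 16-bit precision, and halving the precision halves that. That is the memory pressure that serving engines manage with batching and paging.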

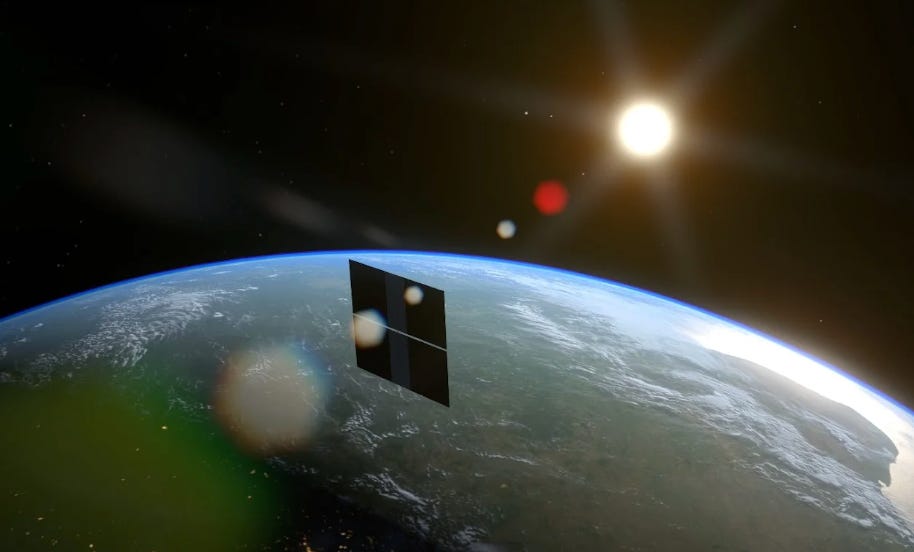

The AI Data Race Just Left the Planet. Starcloud Pioneers Orbital Compute

The next frontier for artificial intelligence is space. Starcloud, an Nvidia-backed startup, has successfully trained and run an AI model from orbit for the first time, marking a significant milestone in the race to build data centers off-planet.

Starcloud’s Starcloud-1 satellite was launched with an Nvidia H100 GPU, a chip reportedly 100 times more powerful than previous space computing hardware. The satellite successfully ran Google’s open-source LLM, Gemma, in orbit, proving that complex AI operations can function in space.

The motivation behind this move is the escalating crisis of terrestrial data centers, which are projected to more than double their electricity consumption by 2030. Moving computing to orbit offers powerful solutions:

Near-Limitless Energy: Orbital data centers can capture constant, uninterrupted solar energy, unhindered by Earth’s day-night cycle or weather.

Cost Efficiency: Starcloud CEO Philip Johnston claims orbital data centers will have 10 times lower energy costs than their Earth-based counterparts.

Real-Time Intelligence: Running AI on the satellite enables instantaneous analysis of satellite imagery, crucial for spotting wildfires, tracking vessels, and military intelligence without the delay of downloading massive data files.

Starcloud is now planning a 5-gigawatt orbital data center, a structure that would dwarf the largest power plant in the U.S., powered entirely by solar energy.
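For a sense of scale, here is a rough sketch of how much solar panel area 5 gigawatts would take. It uses the solar constant (about 1,361 watts per square meter above the atmosphere) and a panel efficiency of 30%, which is our assumption, not a Starcloud figure. It also ignores eclipses, pointing losses, and the radiators needed to shed heat.

```python
SOLAR_CONSTANT_W_PER_M2 = 1361.0  # sunlight intensity above Earth's atmosphere


def solar_array_area_km2(target_power_w, panel_efficiency):
    """Panel area (in square kilometers) needed to produce target_power_w."""
    area_m2 = target_power_w / (SOLAR_CONSTANT_W_PER_M2 * panel_efficiency)
    return area_m2 / 1e6  # 1 km^2 = 1,000,000 m^2


# 5 GW target from the article; 30% efficiency is an assumed value.
area = solar_array_area_km2(5e9, panel_efficiency=0.30)
print(f"Approximate array area: {area:.1f} km^2")
```

With those assumptions the array comes out at roughly 12 square kilometers, or about 3.5 km on a side if laid out as a square.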

The New Space Race: Key Players in Orbital AI

Starcloud’s success has intensified a high-stakes competition among the world’s most powerful tech and space companies, all aiming to capitalize on the promise of scalable, sustainable AI compute.

Google (Project Suncatcher): A “moonshot” initiative to deploy solar-powered satellite constellations equipped with their custom Tensor Processing Units (TPUs). They aim to link these satellites using high-speed optical (laser) links, with two prototype satellites planned for launch by early 2027.

SpaceX: Plans to scale up their next-generation Starlink V3 satellites to double as orbital data centers, leveraging their low launch costs and high-capacity laser links. CEO Elon Musk has stated this could become the “lowest-cost AI compute option” within a few years.

OpenAI (Sam Altman): The CEO has explored acquiring or partnering with rocket manufacturers (like Stoke Space) to establish orbital data centers, driven by the belief that AI’s exponential energy demands will soon require infrastructure beyond Earth.

Lonestar Data Holdings: Focused on a different celestial body, this company is working to put the first-ever commercial lunar data center on the Moon’s surface.

Aetherflux: A startup founded by former Robinhood co-founder Baiju Bhatt, with a target to deploy its own orbital data center satellite in early 2027.

Technical hurdles remain, including radiation, maintenance, and space debris, but with the biggest names in tech betting on space, the future of AI may soon be floating above our heads.

🧰 AI Tools of The Day

Best Text Open Source Models for efficiency and savings.

Llama 3 / 3.2 (Llama 3.2 3B or Llama 3 8B) - Meta’s Llama series is the industry standard. The 3.2 family adds multimodal 11B and 90B models (which can see images) alongside lightweight 1B and 3B text models small enough to run on cheap hardware or even edge devices.

Mistral / Mixtral (Mistral 7B / NeMo) - Extremely efficient. Mistral models often punch above their weight class: a 7B-parameter model can outperform older models up to 5x its size, saving massive compute costs.

DeepSeek (DeepSeek-V3 / R1) - The new cost king. DeepSeek (especially its “MoE” or Mixture-of-Experts models) is currently offering GPT-4 class performance at a fraction of the training and inference cost.

Qwen (Qwen 2.5 7B or 14B) - Excellent coding and math capabilities. If your app involves structured data or logic, this often requires less “prompt engineering” overhead than Llama.
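To compare what these model sizes mean for hardware, here is a quick sketch that estimates the memory needed just to hold a model’s weights at different precisions. The function name `weight_memory_gb` is illustrative. The estimate covers weights only, so leave extra headroom for the KV cache, activations, and runtime overhead.

```python
def weight_memory_gb(params_billion, bits_per_param):
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return params_billion * bits_per_param / 8


# Parameter counts (in billions) taken from the model sizes listed above.
for size in (3, 7, 8, 14):
    fp16 = weight_memory_gb(size, 16)  # full 16-bit weights
    int4 = weight_memory_gb(size, 4)   # 4-bit quantized weights
    print(f"{size}B model: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

By this estimate, a 7B model drops from about 14 GB to about 3.5 GB of weights when quantized to 4 bits, which is roughly the difference between needing a data center GPU and fitting on a consumer card or laptop.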

Great roundup of the geopolitical and technical shifts happening right now. The NVIDIA export paradox is really a classic catch-22: if you block chips, China builds domestic alternatives and Nvidia loses market share; if you allow exports, you potentially fuel rival AI capabilities. The orbital data center angle with Starcloud is wild though. Near-limitless solar power plus zero cooling costs could genuinely reshape infrastructure economics if they crack the radiation and maintenance challenges.